Today I set up Sonarr on my TrueNAS machine. I expected it to work just like my Radarr instance, but when I tried to add a new series I got the following error: Unable to communicate with SkyHook.

I googled it, but it seems not many people have run into the same issue. Luckily, I found a discussion on the TrueNAS forum that gave me some clues: https://www.truenas.com/community/threads/sonarr-radarr-probably-other-arr-jails-unable-to-verify-ssl-certificates-after-latest-update.96008/

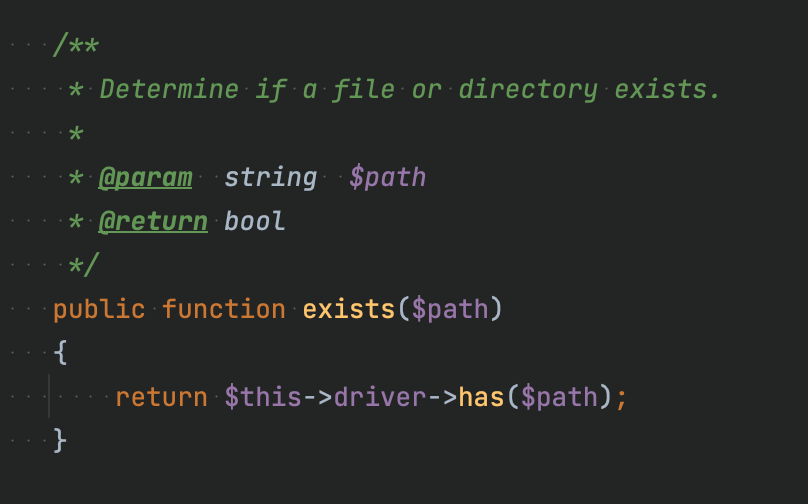

According to the post, this is a known issue: FreeBSD’s Mono 6.8 package is missing its root CA store. The fix is to manually download the root CA bundle and sync it into the jail.

Here is my solution:

# get into the shell of the jail

# 5 is my sonarr jail ID

# if you don't know your jail ID, run `jls`

jexec 5 sh

# install wget so we can download the CA bundle

pkg install wget

# download the root CA bundle and pipe it to cert-sync

wget -O - https://curl.haxx.se/ca/cacert.pem | cert-sync --user /dev/stdin

# at this point, exit the jail and stop it

# run the following command in your TrueNAS shell (NOT the jail shell)

cp -R /mnt/main-pool/iocage/jails/sonarr/root/root/.config/.mono/ /mnt/main-pool/iocage/releases/12.2-RELEASE/root/usr/share/.mono

Notes for the last command:

1. Replace main-pool with your pool’s name.

2. Replace sonarr with your jail’s name.

3. Replace 12.2-RELEASE with the FreeBSD release that your jail is using.
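If you would rather not edit the long paths by hand, the three substitutions above can be put into variables. This is only a sketch: the variable names POOL, JAIL and RELEASE are my own, and the values are the ones from my setup. It prints the copy command instead of running it, so you can check the paths first.

```shell
#!/bin/sh
# Hypothetical variable names; change the values to match your system.
POOL="main-pool"        # your pool's name
JAIL="sonarr"           # your jail's name
RELEASE="12.2-RELEASE"  # FreeBSD release your jail is using

# Build the same source and destination paths as the cp command above.
SRC="/mnt/${POOL}/iocage/jails/${JAIL}/root/root/.config/.mono/"
DST="/mnt/${POOL}/iocage/releases/${RELEASE}/root/usr/share/.mono"

# Dry run: only print the command so it can be reviewed.
echo "cp -R ${SRC} ${DST}"
```

Once the printed command looks right, copy and paste it into the TrueNAS shell (not the jail shell).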

That’s all. Restart your Sonarr jail and try adding a new series again; it should work now.
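If it still fails, one quick sanity check is to see whether cert-sync actually imported anything. The sketch below assumes that `cert-sync --user` writes its store to `~/.config/.mono/new-certs/Trusted`, which is what I would expect from Mono but have not confirmed for every version. The function name `count_mono_certs` is made up for this example. Run it inside the jail as the same user that ran cert-sync.

```shell
#!/bin/sh
# Hypothetical helper: count the files in Mono's user certificate store.
# The default path is an assumption about where cert-sync --user puts them.
count_mono_certs() {
    cert_dir="${1:-$HOME/.config/.mono/new-certs/Trusted}"
    if [ -d "$cert_dir" ]; then
        # tr strips the padding that BSD wc adds around the number
        ls "$cert_dir" | wc -l | tr -d ' '
    else
        # no store directory means nothing was imported
        echo 0
    fi
}

count_mono_certs
```

A result of 0 suggests the download or the cert-sync step did not work, so it is worth running that step again before copying anything.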
